Best paper award for a robot that can see and move transparent objects

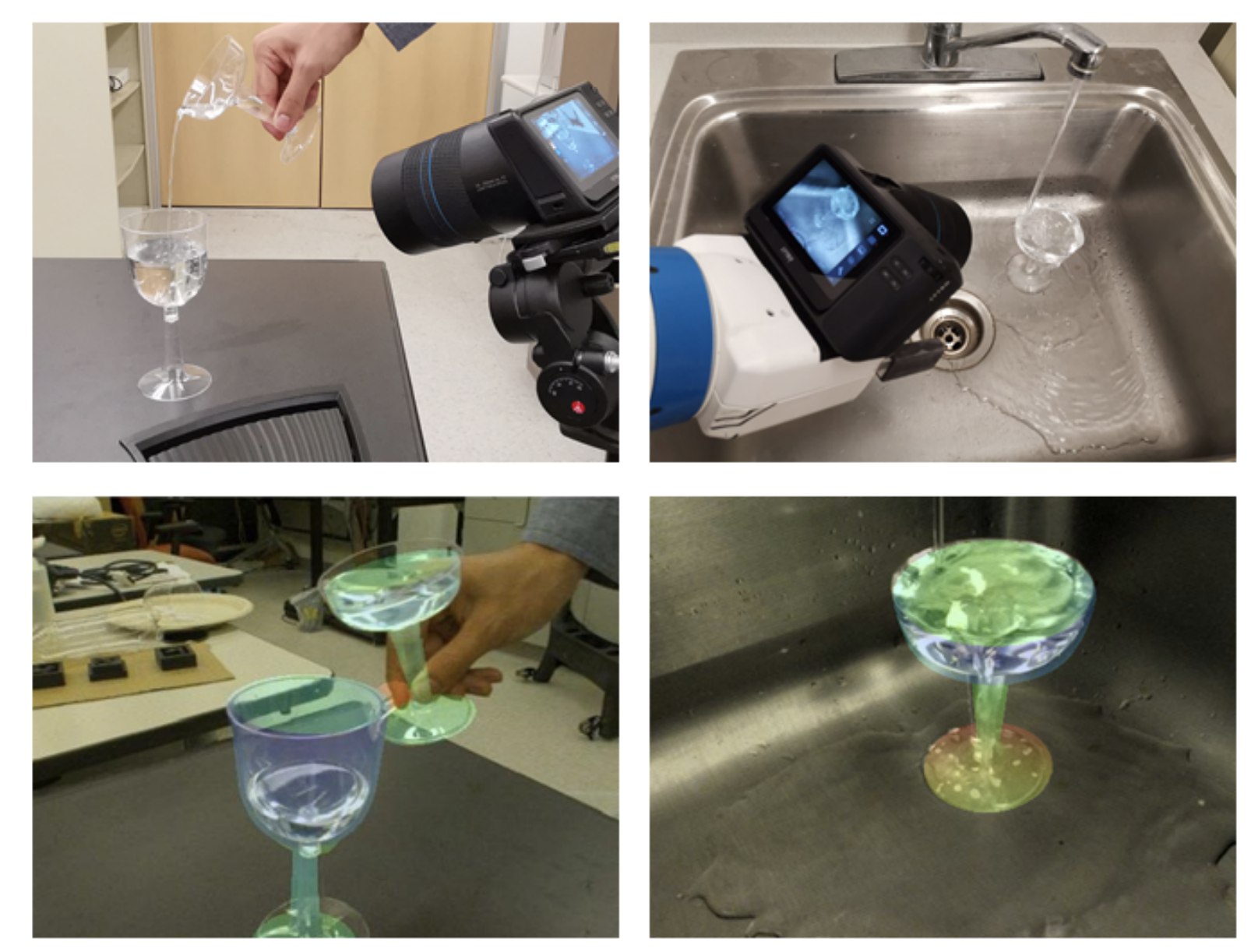

A new method enables robot arms to build a tower of champagne glasses.

Enlarge

Enlarge

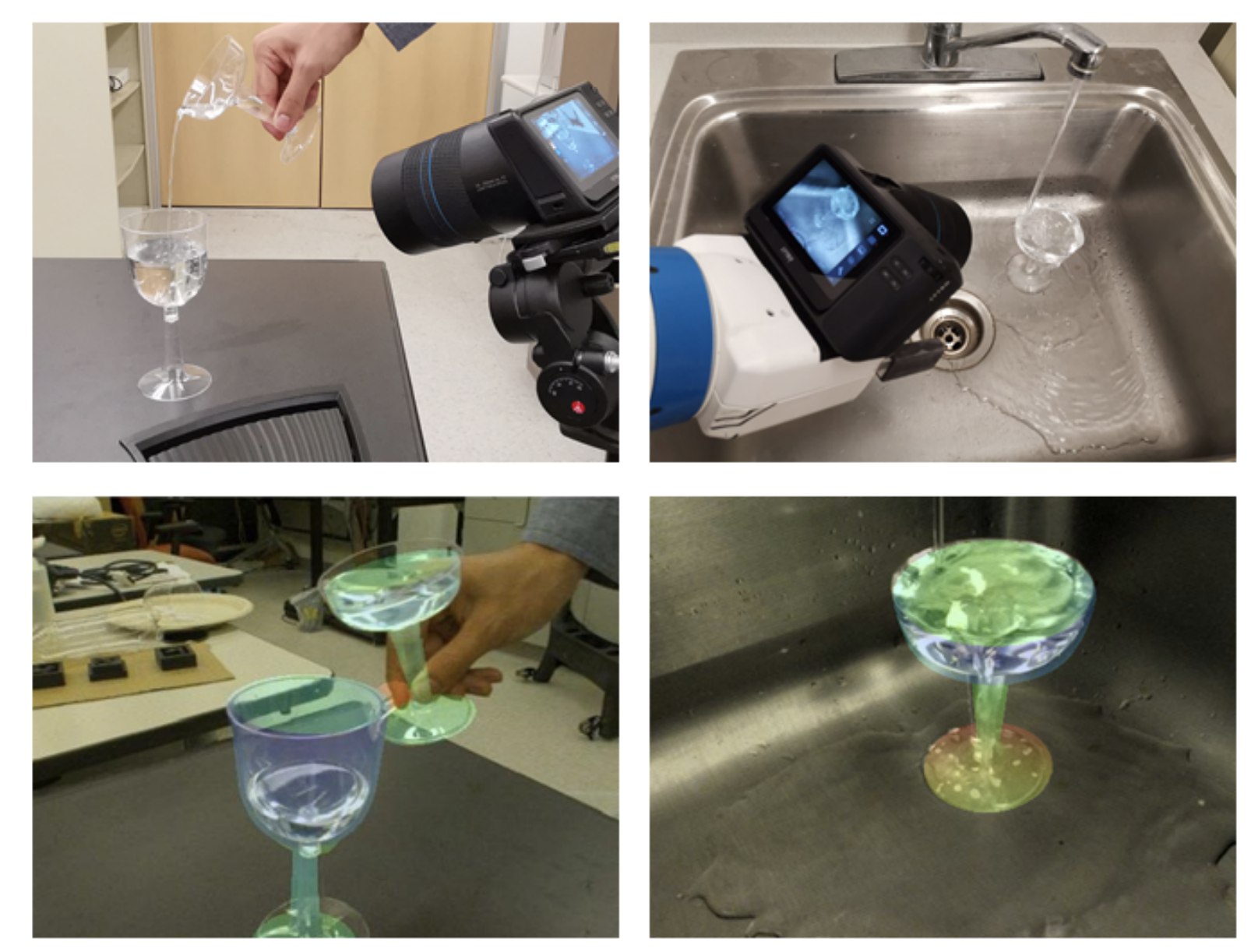

In an important step toward dexterous, perceptive robots, the Laboratory for Progress led by Prof. Chad Jenkins has developed a new method to help the autonomous machines see and manipulate transparent objects. The project, called “LIT: Light-Field Inference of Transparency for Refractive Object Localization,” was recognized with a 2020 Best Paper Award for the IEEE Robotics and Automation Letters Journal.

Translucency is everywhere around us, and many of the objects we deal with day to day or in specialized settings are fully or semi-transparent – glasses, containers, clear liquids, and more. This transparency offers unique challenges when it comes to perception and manipulation, and computer vision models faced with transparency are especially sensitive to changes in lighting, background, and viewing angle.

Imagine reaching for glass containers sitting against either a bright background or a dark background. The nuanced understandings that occur automatically while a human makes sense of these complicated visual scenarios have to be understood and modeled to apply to a machine.

LIT, whose design was led by Zheming Zhou, Xiaotong Chen, and Jenkins, offers a two-stage method for dealing with these transparent objects. It makes use of a technology called light-field sensing, which accounts for both the intensity and the direction of light rays in a scene as they travel through space. Unlike traditional camera sensors, which only account for light intensity, this enabled the researchers to follow the shapes of transparent objects as the light distorted around them.

Enlarge

Enlarge

In their study, the team trained a neural network on photorealistic, synthetic images from a custom light-field rendering system for virtual environments. They found that a network trained on these large, elaborately designed synthetic images can achieve similar performance to those trained on real-world data.

This method allowed the researchers to additionally produce ProgressLIT, or ProLIT, a light-field dataset for training neural networks on transparent objects recognition, segmentation, and pose estimation. The dataset contains 75,000 of their synthetic light-field images, as well as 300 real images from a light-field camera labeled with segmentation and 6-D object poses.

The model was shown to be effective, allowing robotic arms to build a champagne tower in a clean, sparse environment. Further work will explore how the technique can work in more cluttered environments, where even opaque object segmentation becomes more difficult.

The award was presented on June 2 at the IEEE International Conference on Robotics and Automation.

MENU

MENU