Researchers to use brain scans to understand gender bias in software development

The team will use fMRI to identify some of the underlying processes that occur when a code reviewer weighs in on a piece of software and its author.

Despite the computerized appearance of its products, software development is, in the end, a human process. Humans write code and fix bugs, and humans review that code and determine its correctness. The code review step, long formalized across industry as a means to discover defects before a piece of software is shipped, puts a human face to face with a technical product and has them critique it on its technical merits.

But is that all that happens?

When a person sits down in front of a piece of software, they’re sitting down as an individual with biases and prejudices looking at the work of another person. What happens when the code author happens to be someone the code reviewer sees by default as incapable?

A 2017 study explored this situation, reporting the alarming discovery that the code author’s gender had a significant impact on the code reviewer’s approval or rejection rate. In fact, women’s contributions were rejected more often when their gender was identifiable to the reviewer, and accepted more often when their work was anonymous.

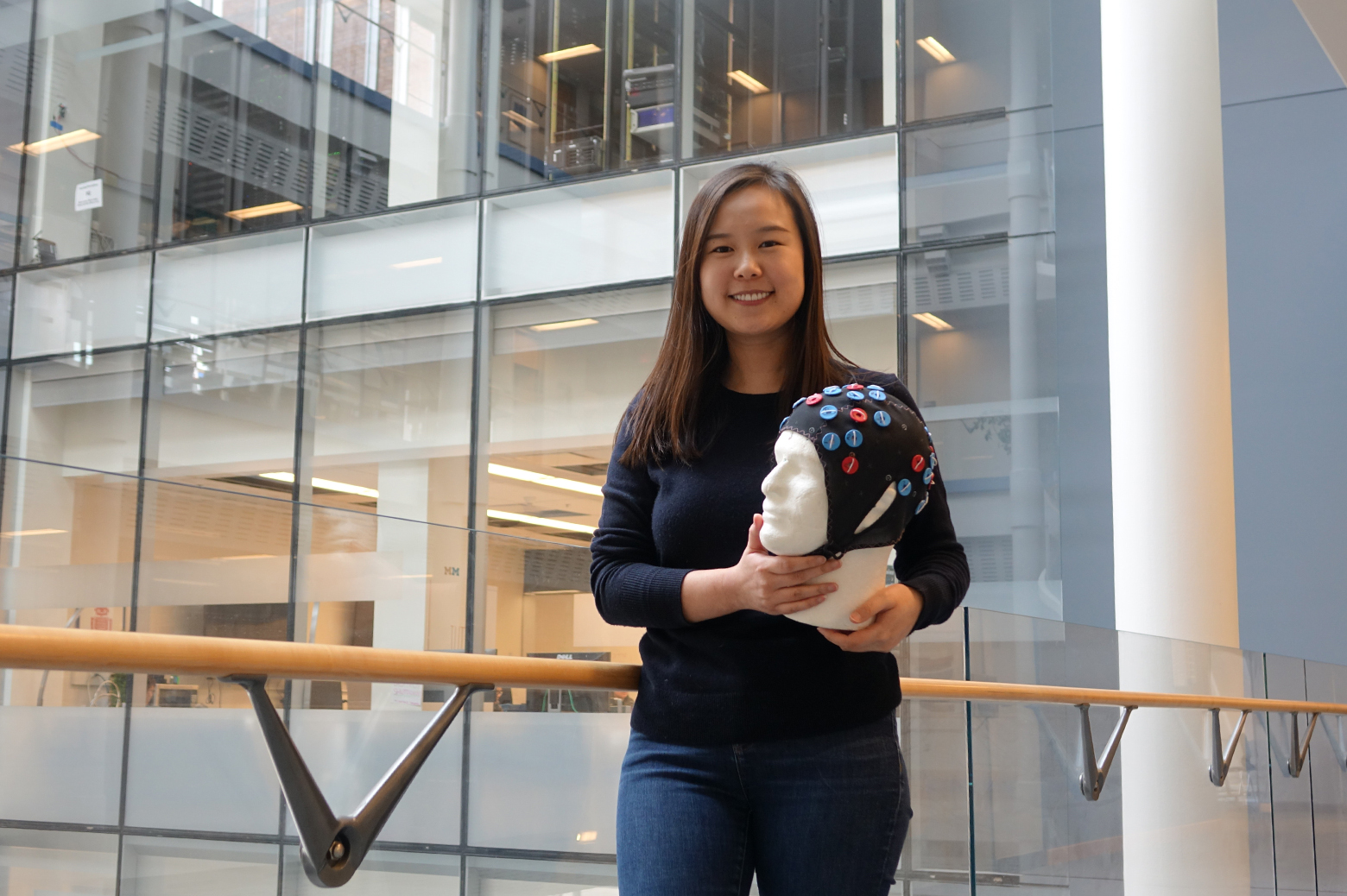

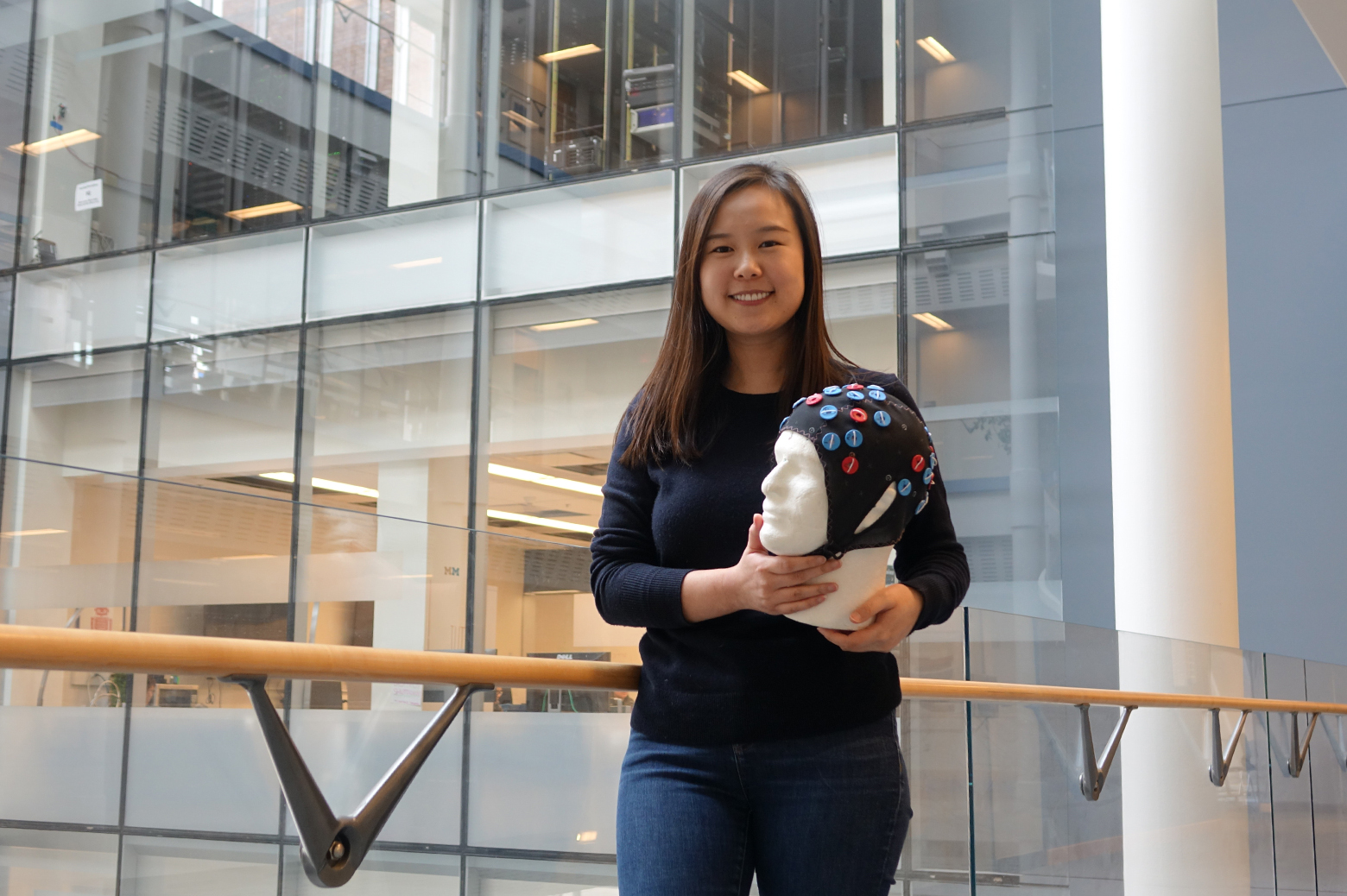

An interdisciplinary team of researchers at the University of Michigan is undertaking a study to test these observations and identify some of the underlying processes that occur when a code reviewer weighs in on a piece of software and its author. With funding from a Google Faculty Research Award, Prof. Westley Weimer and PhD student Yu Huang will use medical imaging and eye tracking to better understand how gender bias impacts decisions made during code review.

Bad practices during code review can negatively impact everyone involved in the software development process. Having work unfairly rejected reflects badly on code authors, adding another dimension, one beyond the author’s control, to the discussion of gender inequalities in the field. In addition, careless rejections like these cost companies unnecessary time and money revisiting functional code, while careless approvals can lead to bugs and broken code slipping into distribution.

“In code review you should just be focused on the quality of the code,” says Huang.

But several studies indicate this is indeed not what happens in practice. Unfortunately, to date the research on this subject has been observational rather than experimental, and the results don’t do much to explain the underlying thought processes that lead to these biases impacting the code review process. Does the code reviewer simply make their decision as soon as they identify the author’s gender? Or do they determine that they’ll spend less time and effort on code submitted by women or men?

“Existing studies on this phenomenon have been limited by their lack of control over users and setting,” Huang explains. So the team is setting up what may be the first experimental code review study that controls exclusively for the gender of the author.

The study itself will incorporate medical imaging (functional MRI) and eye tracking, which can tell a more complete story of the reviewer’s thought process when used in combination.

“Medical imaging will help us visualize which regions of the brain are actually being used in code reviews,” Huang says, “and eye tracking tells you what information they are reading to cause that brain activity.”

The different pieces of code up for review will all be exactly the same across participants, with only the identity of the author changing. Other neuroscience studies have determined that certain regions of the brain are associated with biased thinking. So if a participant’s eyes linger on the author’s profile for a number of seconds and activity spikes in these regions, there’s reason to scrutinize the decision of their review.

The group has already completed a preliminary study for the project’s first paper, in which they measured the amount of time a code reviewer spends on a piece of code based on the gender of its author. These early findings indicate that people spend less time reviewing the code when the author is a woman, though until they dig deeper with the fMRI they can’t say conclusively whether that’s a consequence of rushed, thoughtless work.

Whatever the outcomes, the study will offer some first-of-their-kind insights into the code review process.

“This is the perfect time for us to combine those techniques,” Huang says, citing recent advances in medical imaging technology and the growing body of work in the field of neuroscience. “We couldn’t do this ten years ago at all.”
